In the fall of 02007, my friend Kristján and I met for dinner with one of his colleagues, Almar, and discussed the possibility of creating simple web-based survey software for schools in Iceland. Between the three of us, we had the expertise to make the project work: Kristján and Almar had both worked in education for many years on various aspects of testing, teaching and learning, while I had been programming for the web.

We decided to move forward and called the project Skólapúlsinn, which is Icelandic for School Pulse. The concept behind Skólapúlsinn is that rather than taking a single large test sample once a year, many smaller samples should be taken continuously throughout the school year. Currently, Iceland takes part in the PISA test, which 10th graders take in late May. It assesses how well students have acquired the knowledge and skills essential for full participation in society. Many of the same questions and themes from PISA are reflected in Skólapúlsinn.

There are two major drawbacks to holding a test like PISA only once a year in May. The first is that a single data point, even from a large group, can easily be swayed by any sort of anomaly. Perhaps a tragedy struck that affected the entire 10th grade? The school’s “average” would then reflect only that outlier rather than the past 9 months. The second drawback of a May test is that by the time the results are analyzed and returned, school is finished and the students are away on summer vacation. If it turns out there was a major problem on any of the measured scales, it is too late to correct it. For instance, learning that there was a truancy problem during the last 9 months doesn’t help much, because the time to intervene has passed.

Skólapúlsinn attempts to counter these effects by sampling more frequently, which dampens the impact of any anomalous event, good or bad, and gives the school a monthly “pulse.” This lets the faculty detect and interpret issues during the school year. One consequence is that schools get to establish a baseline. Without a baseline, there is no empirical way to compare one month with another. Having one lets a school know where it stands, so when it introduces new initiatives to improve student life, it can see whether they are having the intended effect.

As we drafted the project, we decided on a few basic ground rules, many of which ran completely contrary to my Web 2.0 ideology and experience. One of them was that none of the data we collect is ever turned over to the schools. This is complete vendor lock-in: the minute they stop paying us, they lose the data. At first I was very much against this idea, for two reasons. First, the schools have paid, so it is their data. Second, we don’t have the time to grow a business while also chasing silly requests from schools to analyze and interpret the results for them. If they had the raw numbers, they could do with them what they wanted. The goal of any product should be to make your customers self-sufficient and empower them to act autonomously.

The reason turning the data over to the schools is impossible is Icelandic data protection law. This is really the first time I have been on the other side of the fence. When I give my email address or credit card to an online merchant, I want them to be liable for protecting my sensitive data, and Skólapúlsinn is now one of those companies. We are collecting sensitive, personal data from and about students. As a company, we are bound NOT to turn over that data and break the anonymity and trust of the students. Students will only answer truthfully when they are guaranteed their answers will not become public.

As a company, Skólapúlsinn had to deal with the proper government agencies and comply with Icelandic law on the collection and storage of personal information. We had to remove a few questions to stay compliant. Originally, there were several questions about drinking and smoking. These are considered much more personal and would have put us in a stricter category, since it is technically illegal for students to engage in those activities. Strangely, questions about mental health, such as depression, were acceptable to ask and collect, but questions about substance abuse and physical health were not. After tweaking the questions and arranging for hosting within the country at the University of Iceland, we fully complied with all the regulations. Our ticket number with persónuvernd.is is S3945 (in Icelandic).

After several meetings around the dinner table and copious amounts of tea and coffee, we launched the project in August 02008 with around 30 participating schools in Iceland. Without additional funding, we developed the project further to meet our customers’ needs, collecting data all along the way.

How it works

When a school joins Skólapúlsinn, the only requirement is that it has at least 40 students in total across grades 6–10, which includes approximately 120 schools in Iceland. Each month the system generates a random cross-sample of 40 students from each school and requires only 80%, or 32, to complete the survey. This is enough to keep the results anonymous while still collecting enough data when averaged. We always pull 40, more students than strictly necessary, because some might be sick on the day they are to be tested, refuse to participate, have switched schools, get tired and never complete the survey, or drop out for any number of other reasons. One or two students not completing the survey should not invalidate the entire effort, so we built in a 20% buffer.
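The sample-size rule above reduces to a small check. This is a minimal sketch, assuming the 80% threshold is applied per school per test month; the constant and function names are hypothetical, not taken from the Skólapúlsinn code.

```python
import math

SAMPLE_SIZE = 40        # students pulled per school each test month
REQUIRED_PERCENT = 80   # share of the sample that must finish for the month to count

# 80% of 40 -> at least 32 completed surveys; the remaining 8 are the buffer.
MIN_COMPLETED = math.ceil(SAMPLE_SIZE * REQUIRED_PERCENT / 100)

def month_is_reportable(completed: int) -> bool:
    """True if enough of the sampled students finished to report the month anonymously."""
    if not 0 <= completed <= SAMPLE_SIZE:
        raise ValueError("completed must be between 0 and SAMPLE_SIZE")
    return completed >= MIN_COMPLETED
```

With these numbers, up to 8 of the 40 pulled students can drop out before a month falls below the anonymity threshold.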

At the beginning of each school year, the school sends a letter home to the parents of all eligible students, explaining the project and letting them know their children are enrolled. Parents who do not want their child to take part can send the letter back, and we remove the child from the system.

Next, we divide the total number of 6th–10th grade students in each school by 40 to determine the number of test months in which the school participates. A school with 110 students gets 110/40 ≈ 2 test months (rounding down). The months are chosen to be symmetrical between the two semesters. A school with two test months surveys 40 random students in October and 40 different random students in April, giving the school a yearly average that represents the fall and spring semesters evenly. The larger the school, the more test months it takes part in, up to nine. So as not to weight any one school more than another, a school with more than 360 students, enough for more than 9 test months, still gets only 9, and the extra students are never pulled. A school of 400 students still has only 9 test months, with 40 random students never participating.

We also use proportional random pulling. If the 10th grade is twice as big as another grade, twice as many 10th graders are randomly selected each month. At the end of the school year, every grade is left with a similar share of students who were never pulled. This prevents the random draw from testing too many 10th graders early in the year, depleting them before the final months and skewing the averages. Both grade and gender are pulled proportionally.
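Proportional pulling by grade and gender is a form of stratified random sampling. The sketch below is one way to do it, assuming largest-remainder rounding to turn fractional quotas into whole students; that rounding rule, the function name and the dictionary fields are assumptions, since the source does not say how fractions are handled. The `students` argument is meant to be the pool not yet pulled this school year.

```python
import random
from collections import defaultdict

def proportional_sample(students, sample_size, rng=None):
    """Draw sample_size students so each (grade, gender) stratum gets slots
    in proportion to its size, using largest-remainder rounding."""
    rng = rng if rng is not None else random.Random()
    total = len(students)
    if not 0 < sample_size <= total:
        raise ValueError("sample_size must be between 1 and the pool size")
    # Group the not-yet-pulled pool by grade and gender.
    strata = defaultdict(list)
    for s in students:
        strata[(s["grade"], s["gender"])].append(s)
    # Whole-number share of each stratum plus the remainder lost by rounding down.
    alloc, remainder = {}, {}
    for key, members in strata.items():
        alloc[key], remainder[key] = divmod(sample_size * len(members), total)
    # Hand the slots lost to rounding to the strata with the largest remainders.
    leftover = sample_size - sum(alloc.values())
    for key in sorted(remainder, key=remainder.get, reverse=True)[:leftover]:
        alloc[key] += 1
    # Random draw within each stratum.
    sample = []
    for key, members in strata.items():
        sample.extend(rng.sample(members, alloc[key]))
    return sample
```

Integer `divmod` keeps the quotas exact, so a stratum is never asked for more students than it has.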

For schools with between 40 and 80 students, we created an exception. Since there are 5 months between their first and second surveys, we pull as many untested students as possible the second time, then fill the rest of the sample with random students who have already been tested. This keeps the number of respondents above the 32 mark that protects anonymity. It allows Skólapúlsinn to serve schools with as few as 40 students, which expands our client base and strengthens the national average by representing all types of schools, large and small.
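The small-school exception can be sketched as "untested first, then top up." This hypothetical helper ignores the proportional grade and gender weighting for brevity and assumes students are identified by plain IDs.

```python
import random

def small_school_pull(students, already_tested, sample_size=40, rng=None):
    """Second-survey pull for a 40-79 student school: every untested student
    first, then randomly chosen already-tested students to fill the sample."""
    rng = rng if rng is not None else random.Random()
    untested = [s for s in students if s not in already_tested]
    tested = [s for s in students if s in already_tested]
    if len(untested) >= sample_size:
        return rng.sample(untested, sample_size)
    # Top up with students surveyed in the first round.
    top_up = min(sample_size - len(untested), len(tested))
    return untested + rng.sample(tested, top_up)
```

For a 60-student school, the second pull takes the 20 students not surveyed in the first round plus 20 randomly chosen repeats.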

At the start of each month, the software generates random, one-time anonymous access codes for each of the 40 selected students. These are sent to the school coordinator, who prints out the list and cuts it into strips. Each strip has the student’s name, national ID number and access code. The students log in and are presented with brief instructions and an explanation of how the data is collected and anonymously used. During the survey, students can go back and forth to change and update their answers; once the survey is finished, the software locks the code and it can no longer be used. If a student does not participate, the code expires automatically at the end of the test month.
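The access-code lifecycle described above (issue, use, lock on completion, expire at month's end) might look like the following sketch. The code length, alphabet and class name are hypothetical choices; only the lifecycle comes from the text. Python's `secrets` module is used because the codes must be unguessable.

```python
import calendar
import secrets
import string
from dataclasses import dataclass
from datetime import date

# Hypothetical code format: 8 characters drawn from A-Z and 0-9.
ALPHABET = string.ascii_uppercase + string.digits
CODE_LENGTH = 8

@dataclass
class AccessCode:
    code: str
    expires: date        # last day of the test month
    used: bool = False   # set once the survey is submitted

    def can_log_in(self, today: date) -> bool:
        # Valid until the survey is submitted or the test month ends.
        return not self.used and today <= self.expires

    def finish(self) -> None:
        # Lock the code so answers can no longer be changed.
        self.used = True

def issue_codes(count: int, year: int, month: int) -> list[AccessCode]:
    """Generate `count` distinct one-time codes that expire at the end of the month."""
    last_day = date(year, month, calendar.monthrange(year, month)[1])
    codes: set[str] = set()
    while len(codes) < count:
        codes.add("".join(secrets.choice(ALPHABET) for _ in range(CODE_LENGTH)))
    return [AccessCode(c, last_day) for c in sorted(codes)]
```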

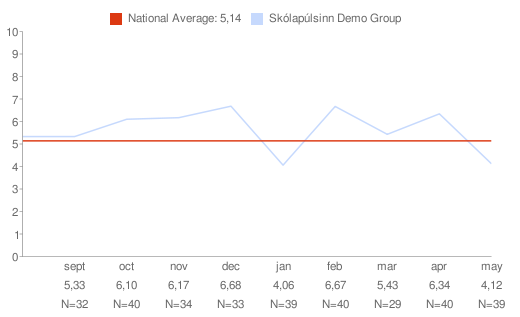

The survey consists of 21 different nationally tested psychometric scales. Each scale has approximately 5–7 multiple-choice questions with 4 answers: strongly disagree, disagree, agree and strongly agree. We use 4 answers to force a choice; with a fifth, neutral option, students would not need to take a stand on either the agree or the disagree side. All of the questions are scored and a mean is computed for each student; these means are then averaged over all students who took the survey that month, producing a single data point that represents the school for that month. The school’s result is plotted on a scale of 1–10. This alone doesn’t tell you much, so we added a national average based on all students in all the schools who have taken the survey. As more students take the survey, the national average becomes more precise, and schools can compare where they rank against it. We now have one year of data covering all the highs and lows of the school year, from which a solid national average will be computed and used for the following school year.
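The two-level averaging (per student, then per school) can be sketched as below, assuming answers are coded 1 (strongly disagree) to 4 (strongly agree). The source does not describe how the result is mapped onto the 1–10 display scale, so that step is deliberately left out; the function names are hypothetical.

```python
from statistics import mean

VALID_ANSWERS = {1, 2, 3, 4}   # 1 = strongly disagree ... 4 = strongly agree

def student_scale_score(answers: list[int]) -> float:
    """Mean of one student's answers to the questions on one scale."""
    if not answers or any(a not in VALID_ANSWERS for a in answers):
        raise ValueError("answers must be a non-empty list of values 1-4")
    return float(mean(answers))

def school_month_score(answers_by_student: list[list[int]]) -> float:
    """Average of the per-student means: the school's data point for the month."""
    return float(mean(student_scale_score(a) for a in answers_by_student))
```

Averaging per-student means, rather than pooling every answer, gives each student equal weight even if a scale's question count changes.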

All of the questions used in the survey come from scales previously tested in Iceland: some are from PISA, others from the health institute and other government organizations. These have all been tested on a wide range of students and give us strong reference results to compare against when generating our scaling factors. The 21 scales are grouped into three major categories: Student Engagement in School, Student Health & Wellness, and School and Classroom Climate.

Each of the 21 scales is fit to a bell curve: when we plot the frequency of the individual students’ averages, it forms a standard bell shape. This is critical for the next step. Along with the average for each scale, we compute the standard deviation and variance. Knowing that your school scores 6.0 on the first scale when the national average is 5.4 only tells you that you are above average, not by how much. It could be that one school scored a 1 out of 10 and dragged the national average down, when in reality most schools are actually better than your 6.0. By computing the standard deviation for each test month, it is possible to say, with 90% confidence, whether a 6.0 is significantly above or below the national average. This matters because a failing school might have a 5.4 against a national average of 5.6 and think, “this isn’t so bad, just 0.2 away,” when in reality most schools fall between 5.58 and 5.7, making 5.4 a significant distance away. Values that seem small might actually be very significant.
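The text does not spell out which significance test is used, so the following is one plausible approach rather than the Skólapúlsinn method: a two-sided z-test of the school's monthly mean against the national mean, assuming the national standard deviation of individual student scores is known and the normal approximation holds. The standard deviations in the usage note are made-up illustrations.

```python
from math import sqrt
from statistics import NormalDist

def compare_to_national(school_mean, national_mean, national_sd, n_students, confidence=0.90):
    """Two-sided z-test of a school's monthly mean against the national mean.
    Returns (z, verdict)."""
    # Standard error of a mean over n_students individual scores.
    standard_error = national_sd / sqrt(n_students)
    z = (school_mean - national_mean) / standard_error
    # About 1.645 for 90% two-sided confidence.
    critical = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    if z > critical:
        return z, "significantly above"
    if z < -critical:
        return z, "significantly below"
    return z, "not significantly different"
```

With a hypothetical student-level SD of 0.5 and 32 respondents, a 5.4 against a national 5.6 gives z ≈ -2.26, which falls below the 90% threshold even though the raw gap is only 0.2.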

Finally, at the end of the survey there are two open-ended questions, which schools can change. The defaults are “What do you like about your school?” and “What do you dislike about your school?” These have turned out to be an important part of the survey, because they give students an anonymous voice to let the faculty know about issues. The answers can also help explain unexpected results on the 21 scales. If Student Well Being unexpectedly rises one month and the open-ended answers all praise the new food in the cafeteria, that can point to a possible causal link and lead to further improvements.

Each school’s principal has a login to an administrative panel containing graphs of all the results: a month-by-month breakdown of each test for each scale, along with the answers to all the open-ended questions. At the end of the year, we also generate a single PDF document covering all the scales so it can easily be printed for reference. Every school in Iceland is obligated to write a report for its district office, and the results from Skólapúlsinn can be used to supplement this report. Each year’s data is archived and remains available to the schools, so they can watch for longitudinal trends over multiple years. We created a demo Skólapúlsinn admin panel so anyone can see a sample of the breakdown we present to each school.

Unexpected Consequences

Within the first year of running the project, Skólapúlsinn was used in at least two interesting cases. The first involved a rash of school bullying stories in the news. Several schools were singled out, with students claiming to have been bullied and describing a culture of bullying at their school. Some of these schools were members of Skólapúlsinn, so we made a courtesy call to remind them that they had strong, statistically measured questions and results on bullying. They could confirm for themselves, through the survey, whether there really was a problem and how far above or below the national average they actually were. Some of these results were used to debunk the bullying witch-hunt the local TV stations had started.

Another factor tracked by the survey is discipline in the classroom. One school noticed that it was below average in this category. Without Skólapúlsinn, it would have had no idea where it stood relative to other schools, because there was no baseline for comparing two schools. After a few months of data, it was clear this was not an anomaly, so over the Christmas break the faculty sat down and worked out a plan to improve the situation. Again, this is difficult to measure: “I think discipline is down” isn’t a measurable value, and over a long break it is hard to compare your memory from 30+ days ago with what is happening today. Luckily, Skólapúlsinn is designed to turn qualitative impressions into quantitative, measurable values. Because it takes a random cross-sample of students each month, the margin of error due to random anomalies is minimized, so November and December can be compared with January and February and a quantitative answer reached. The school can know numerically how much or how little improvement its changes produced. None of this was possible before without small, iterative surveys. A single grade-wide survey of 10th graders once a year would reveal nothing about the changes, because there are no earlier data points to compare to!
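A before/after comparison like the discipline example could be done with Welch's t-test, which does not assume the two periods have equal variance. This is a swapped-in technique for illustration, not necessarily what Skólapúlsinn computes; the inputs are assumed to be per-student scale scores pooled from the months on each side of the break.

```python
from math import sqrt
from statistics import mean, variance

def welch_t(before, after):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom for
    the change in mean score from `before` to `after`."""
    n1, n2 = len(before), len(after)
    if n1 < 2 or n2 < 2:
        raise ValueError("each period needs at least two scores")
    # Squared standard error of each period's mean.
    v1, v2 = variance(before) / n1, variance(after) / n2
    t = (mean(after) - mean(before)) / sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df
```

A positive t means scores rose after the break; turning t and df into a p-value needs a t-distribution function, which Python's standard library does not provide.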

Both of these incidents are excellent byproducts of the survey. We have closed the information loop between students and faculty, and made it possible to measure changes numerically to determine the success or failure of new initiatives. One of our next steps is to compute correlations between parts of the survey, so schools can know to what degree Enjoyment of Reading is linked to Discipline in the Classroom.
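Linking two scales such as Enjoyment of Reading and Discipline in the Classroom could start with a Pearson correlation over per-student scores from the same surveys. This sketch assumes the two lists are paired by student; on Python 3.10+ `statistics.correlation` computes the same coefficient.

```python
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation between two equally long lists of paired scale scores."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equally long lists with at least two values")
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    # Covariance and spread, without the common 1/n factor that cancels out.
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        raise ValueError("a scale with no variation has no defined correlation")
    return cov / (sx * sy)
```

A correlation near +1 or -1 flags a pair of scales worth investigating; it does not by itself show that improving one will improve the other.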

Another byproduct of conducting the survey online rather than on paper is that everything is time-stamped. On a paper survey, a student might finish in 5 minutes and then sit there for 20, or flip back and forth between pages, but this information is lost when they hand in the results. Our system records all of this metadata. It lets us dismiss surveys completed in impossibly short times, as well as analyze the average time per question, per grade and per gender. We might find that one or two questions are extremely difficult for younger children to answer: if they spend far too long looking at the scale without answering, they may be misinterpreting the question, which skews the data. Knowing this, we can improve and change the questions for the following years.
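Both uses of the timing metadata can be sketched in a few lines. The 2-seconds-per-question cut-off and the record fields are hypothetical; the source does not say what counts as an "impossible" time.

```python
from collections import defaultdict
from statistics import mean

MIN_SECONDS_PER_QUESTION = 2.0   # hypothetical cut-off for an impossible pace

def too_fast(total_seconds: float, n_questions: int) -> bool:
    """Flag a survey finished faster than a plausible reading pace."""
    if n_questions <= 0:
        raise ValueError("n_questions must be positive")
    return total_seconds / n_questions < MIN_SECONDS_PER_QUESTION

def mean_time_per_question(responses):
    """responses: dicts with 'question', 'grade', 'gender' and 'seconds'.
    Returns {(question, grade, gender): mean seconds spent}."""
    groups = defaultdict(list)
    for r in responses:
        groups[(r["question"], r["grade"], r["gender"])].append(r["seconds"])
    return {key: mean(times) for key, times in groups.items()}
```

A question whose mean time for 6th graders is far above that for 10th graders would be a candidate for rewording.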

Dashboard

In the process of working on this project, we created a public dashboard exposing some simple facts and figures about our database. By the end of the first year of testing, we had 33 schools enrolled in the system, with over 5,800 students. This accounted for over 25% of the 6th–10th grade students in Iceland: 1 in 4 eligible students in the country took part in Skólapúlsinn. Most interesting to me was the figure for how much paper was saved by conducting the survey online. We estimated that a similar printed survey, with pages printed, put into envelopes, mailed to the office, collated and then shredded, would use over 50,000 sheets of paper a year. That is a staggering amount of waste for a country with so few trees! Because the server is hosted in Iceland, it runs on renewable energy such as geothermal power, and by not using those 50,000 sheets of paper we saved countless trees and shipping costs. Skólapúlsinn is very much a carbon-friendly operation.

Further Development

This project has been run in a very “Agile” way. We don’t adhere strictly to formal Agile development processes, but we work in small, iterative changes driven by a product backlog. There is a long list of features we and our customers would like to see in the system, and we prioritize that backlog by value to our customers and the ease with which we can code and publish each feature. Some of the planned additions are very simple, others give more feedback to the schools, and a few attempt to use the web medium to our advantage, producing interactions not possible on paper.

In June 02009, Skólapúlsinn was awarded a grant from Tækniþróunarsjóður (the Technology Development Fund) to continue developing the software for the 02009–02010 school year. Part of the plan is to translate the questions and interface into additional languages, such as Danish, Norwegian and English, to extend the software into new markets and allow us to compare students and schools at the international level.

The major next steps in development are not in the software itself but in the results. We aim to analyze the data for correlated questions. It may turn out that the best way to increase interest in reading is NOT to work on reading initiatives, but to reduce depression. If these, or other, factors correlate, then effort spent improving one area brings benefits in others for free. Exploring and understanding these correlations can sharpen a school’s focus and create a better experience for the students.

The amount of data collected is staggering: over half a million individual answers in the database, each telling us something. Working out how to interpret it to improve student life and the quality of schools in Iceland and abroad will take time and careful research. Just recently, the Icelandic education sector has been discussing boys’ poor academic test results for 02008–02009. It is easy to take end-of-year grades and compare boys and girls, and because of the discrepancy everyone is quick to rush out new initiatives focused on improving boys’ grades. We looked at the results in Skólapúlsinn and saw trends the education sector doesn’t have access to. While girls might score higher academically, they fare much worse than the boys on self-esteem, anxiety, depression and other factors. If all of the focus is on improving grades for boys, these girls would suffer even further. School life is much more than a report card, and we are building the tools to identify, measure and improve it. This is just the beginning of a much deeper project, which I am excited to be a part of, and I am excited to see what comes out of it.